CODE_RED

Dagmar Reinhardt, Lian Loke (Sydney, Australia, 2021)

The field of social robotics has brought attention to the social, embodied interaction of humans and robots. As we begin to examine how robots can play a role in personal, bodily grooming and rituals such as the feminine act of applying lipstick, a seemingly simple interaction between the human hand and lip raises challenging ethical and technical issues for human-robot interaction. We pursue a feminist, speculative, critical approach to investigating how robots can collaborate with humans through gesture and touch, here with an industrial robot drawing lipstick on a human face.

Compared to the fast, deft, repetitive motions of industrial robots, the application of robotic arms to acts of delicate, sensuous human gesture and touch requires a closer examination of the expressive dynamics, kinaesthetics, tactility and affective touch of the human-robot interaction. Applying and wearing lipstick is a highly individual act, endowed with personal meaning, yet sharing social and cultural connotations. By defamiliarising what seems a simple, ordinary act, the research has begun to reveal the complexities inherent in personal acts of applying and wearing lipstick as skilful acts of hand-lipstick-lip coordination. Interestingly, the lips are not passive, but reach towards the hand-lipstick and collaborate in this sensuous, tactile interaction. These varied approaches complicate the task of programming a robot to perform similar movement paths.

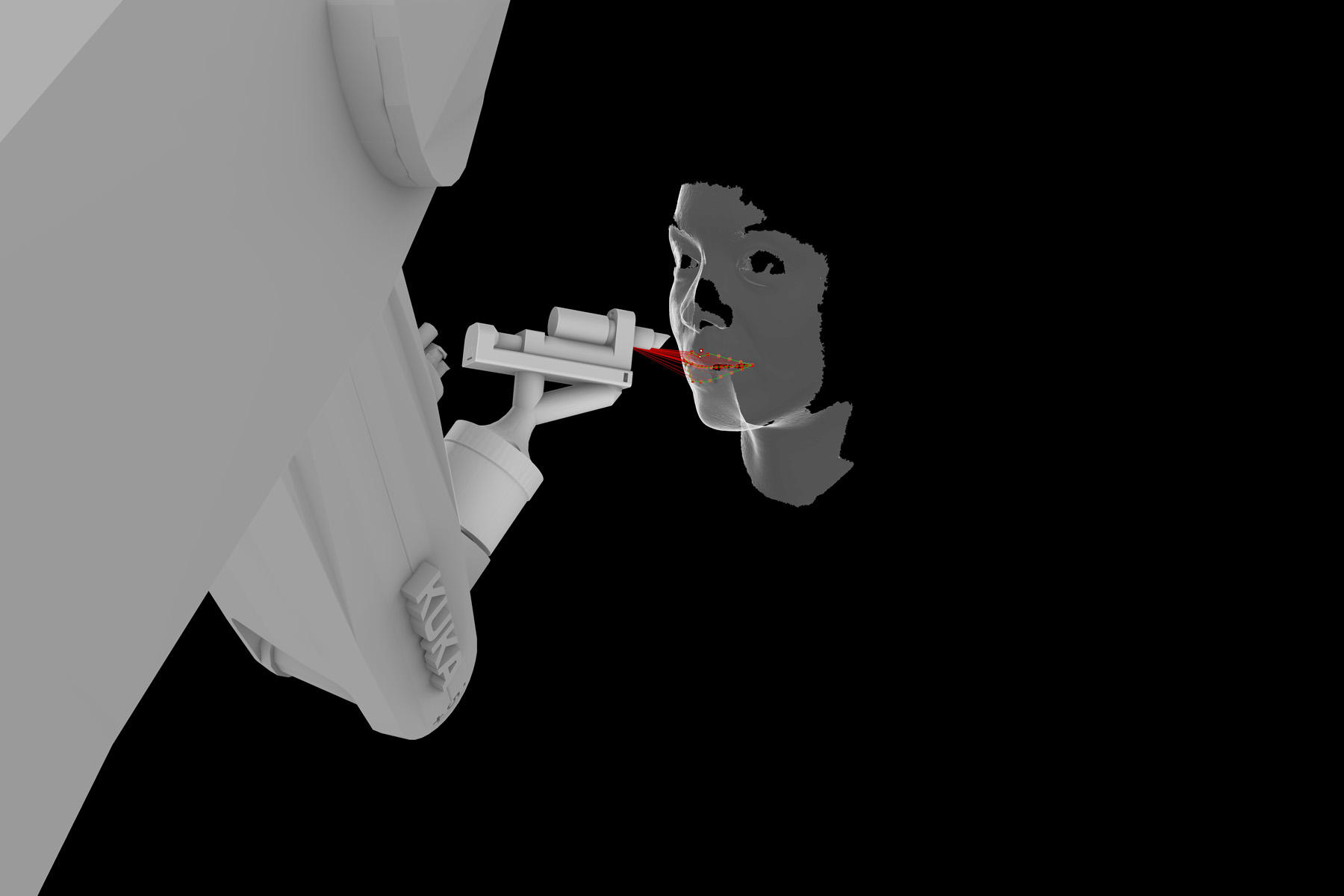

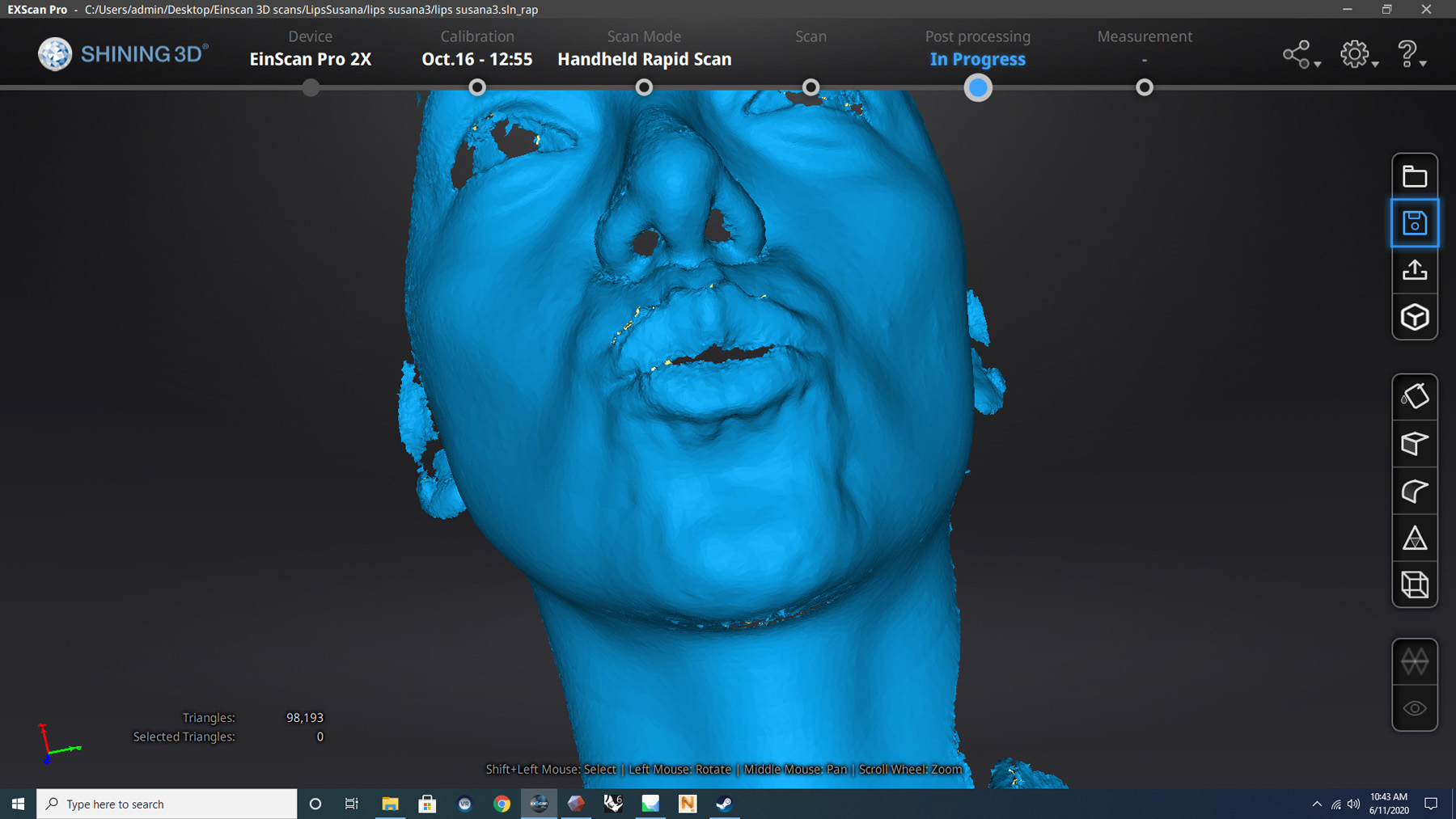

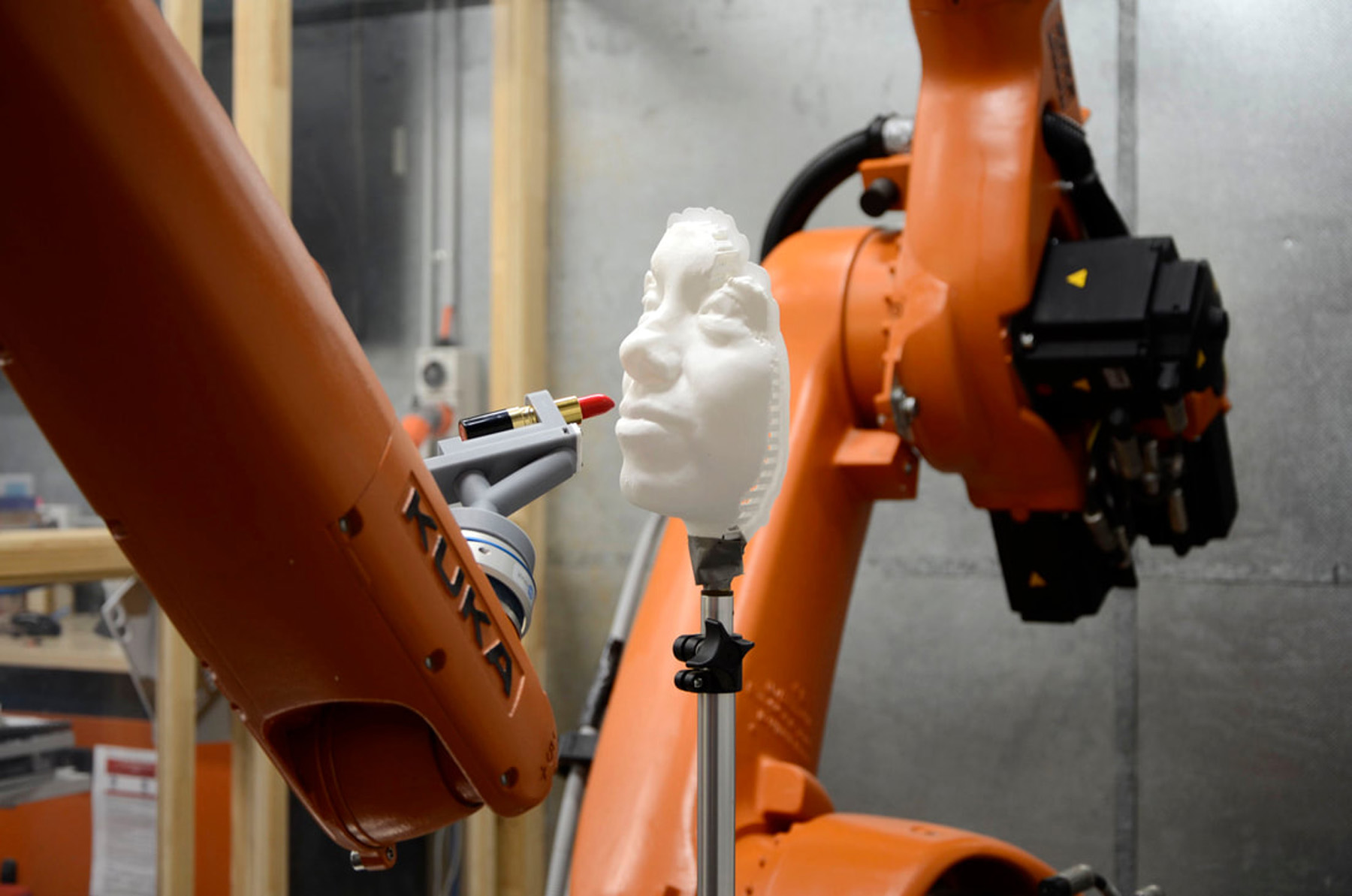

The research used a Kuka KR10 industrial robot for its range of motion dynamics and movement expression, and for its capacity for precise, delicate gestures. Working with industrial robots poses challenges in relation to proximity and safety, with strict protocols to follow to minimise harm to humans working in close proximity. As a workaround, we scanned the human face and produced a 3D print as a proxy for the real human, combined in Rhino with a digital model of the KR10 robot to provide a simulation environment. The toolpath is scripted in Grasshopper (GH) with KUKA|prc, and the robot then draws lipstick on the human face (or proxy), which is positioned very carefully to ensure calibration.

In a first version, the robot paints the bottom lip twice, and then draws on the top lip from the centre to one side and then the other. The angle of the lipstick is varied along the path to achieve better coverage and to mimic more closely how people apply lipstick. The 3D mesh of the scanned face and lips served as the reference for working out the path and angle of the robot end effector, using the digital modelling environment of Rhino and the KUKA|prc scripting tool in Grasshopper.
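The stroke order described for the first version can be sketched in plain Python. This is an illustrative sketch only, not the project's Grasshopper/KUKA|prc definition: the names `Waypoint`, `stroke` and `lipstick_passes`, the linear blending of the tilt, and the 20-degree maximum tilt are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Waypoint:
    position: Point
    tilt_deg: float  # lipstick tilt along the stroke (assumed convention)


def stroke(points: List[Point], tilt_start: float, tilt_end: float) -> List[Waypoint]:
    """Turn a polyline into waypoints, blending the tilt linearly along it."""
    n = len(points)
    waypoints = []
    for i, p in enumerate(points):
        t = i / (n - 1) if n > 1 else 0.0
        waypoints.append(Waypoint(p, tilt_start + t * (tilt_end - tilt_start)))
    return waypoints


def lipstick_passes(bottom: List[Point], top: List[Point],
                    max_tilt: float = 20.0) -> List[List[Waypoint]]:
    """Build the four strokes; both lip curves run from one mouth corner to the other.

    max_tilt is a hypothetical value, not one reported by the project.
    """
    centre = len(top) // 2
    return [
        stroke(bottom, -max_tilt, max_tilt),      # bottom lip, first pass
        stroke(bottom, -max_tilt, max_tilt),      # bottom lip, second pass
        stroke(top[centre::-1], 0.0, -max_tilt),  # top lip: centre to one side
        stroke(top[centre:], 0.0, max_tilt),      # top lip: centre to the other side
    ]
```

In this sketch the lip curves would be sampled from the scanned face mesh as ordered lists of 3D points. Each resulting waypoint would still have to be converted into a full end-effector pose before a robot could follow it.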

Future work, pending ethics approval, will invite anyone who wears lipstick to contribute both the gestural, tactile actions of applying lipstick and the personal relationships and stories they have with their lipstick. This will provide a corpus of data for further exploration and experimentation into how a robotic arm can be programmed to draw lipstick in a nuanced way that takes into account the unique variations of individual lips.

Project Credits: Concept: Dagmar Reinhardt and Lian Loke. Actors: Susana Alarcon Licona, Kuka KR10. Robot programming: Lynn Masuda. Technical Design: Susana Alarcon Licona, Dylan Wozniak-O’Connor. Cinematography and video edit: Paul Warren. Sound: Lindsay Webb.